Functions | |

| std::pair< carma_perception_msgs::msg::PredictedState, double > | composePredictedState (const tf2::Vector3 &curr_pt, const tf2::Vector3 &prev_pt, const rclcpp::Time &prev_time_stamp, const rclcpp::Time &curr_time_stamp, double prev_yaw) |

| Composes a PredictedState message form a provided current point and previous point. It calculates the speed from these points using mobility_path_time_step. More... | |

| void | calculateAngVelocityOfPredictedStates (carma_perception_msgs::msg::ExternalObject &object) |

| Helper function to fill in the angular velocity of the external object. More... | |

| double | getYawFromQuaternionMsg (const geometry_msgs::msg::Quaternion &quaternion) |

| Gets the yaw angle in degrees described by the provided quaternion. More... | |

| tf2::Vector3 | transform_to_map_frame (const tf2::Vector3 &ecef_point, const lanelet::projection::LocalFrameProjector &map_projector) |

| Transforms ecef point to map frame using internally saved map transform. More... | |

| std::vector< geometry_msgs::msg::Pose > | sample_2d_path_from_radius (const geometry_msgs::msg::Pose &pose, double velocity, double radius_of_curvature, double period, double step_size) |

| std::vector< geometry_msgs::msg::Pose > | sample_2d_linear_motion (const geometry_msgs::msg::Pose &pose, double velocity, double period, double step_size) |

| geometry_msgs::msg::PoseWithCovariance | pose_from_gnss (const lanelet::projection::LocalFrameProjector &projector, const tf2::Quaternion &ned_in_map_rotation, const lanelet::GPSPoint &gps_point, const double &heading, const double lat_variance, const double lon_variance, const double heading_variance) |

| std::vector< carma_perception_msgs::msg::PredictedState > | predicted_poses_to_predicted_state (const std::vector< geometry_msgs::msg::Pose > &poses, double constant_velocity, const rclcpp::Time &start_time, const rclcpp::Duration &step_size, const std::string &frame, double initial_pose_confidence, double initial_vel_confidence) |

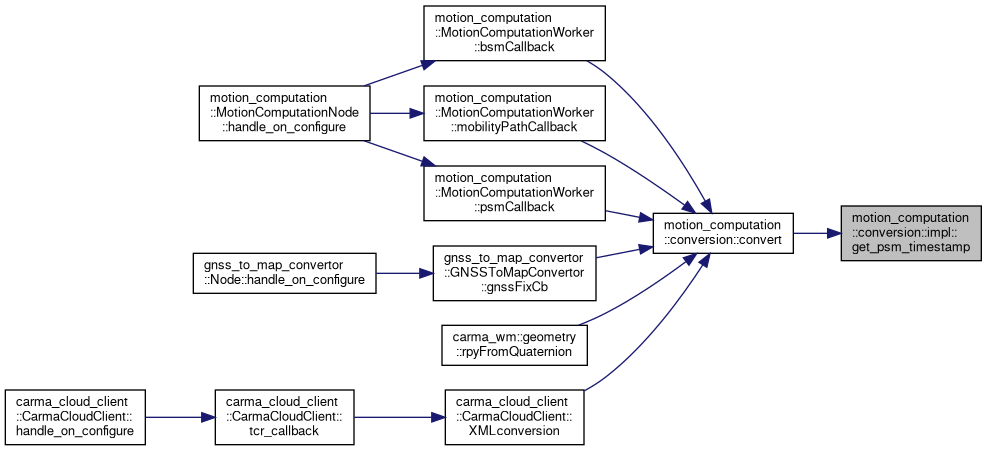

| rclcpp::Time | get_psm_timestamp (const carma_v2x_msgs::msg::PSM &in_msg, rclcpp::Clock::SharedPtr clock) |

Function Documentation

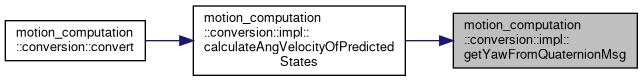

◆ calculateAngVelocityOfPredictedStates()

| void motion_computation::conversion::impl::calculateAngVelocityOfPredictedStates | ( | carma_perception_msgs::msg::ExternalObject & | object | ) |

Helper function to fill in the angular velocity of the external object.

- Parameters

-

ExternalObject to fill in its angular velocities

Definition at line 212 of file mobility_path_to_external_object.cpp.

References getYawFromQuaternionMsg().

Referenced by motion_computation::conversion::convert().

◆ composePredictedState()

| std::pair< carma_perception_msgs::msg::PredictedState, double > motion_computation::conversion::impl::composePredictedState | ( | const tf2::Vector3 & | curr_pt, |

| const tf2::Vector3 & | prev_pt, | ||

| const rclcpp::Time & | prev_time_stamp, | ||

| const rclcpp::Time & | curr_time_stamp, | ||

| double | prev_yaw | ||

| ) |

Composes a PredictedState message form a provided current point and previous point. It calculates the speed from these points using mobility_path_time_step.

- Parameters

-

curr_pt current point prev_pt prev_pt. this point is recorded in the state prev_time_stamp prev_pt's time stamp. This time is recorded in the state curr_time_stamp The timestamp of the second point when used to compute velocity at prev point prev_yaw A previous yaw value in radians to use if the two provided points are equivalent

- Returns

- std::pair<carma_perception_msgs::msg::PredictedState,double> where the first element is the prediction including linear velocity, last_time, orientation filled in and the second element is the yaw in radians used to compute the orientation

Definition at line 167 of file mobility_path_to_external_object.cpp.

Referenced by motion_computation::conversion::convert().

◆ get_psm_timestamp()

| rclcpp::Time motion_computation::conversion::impl::get_psm_timestamp | ( | const carma_v2x_msgs::msg::PSM & | in_msg, |

| rclcpp::Clock::SharedPtr | clock | ||

| ) |

Definition at line 475 of file psm_to_external_object_convertor.cpp.

Referenced by motion_computation::conversion::convert().

◆ getYawFromQuaternionMsg()

| double motion_computation::conversion::impl::getYawFromQuaternionMsg | ( | const geometry_msgs::msg::Quaternion & | quaternion | ) |

Gets the yaw angle in degrees described by the provided quaternion.

- Parameters

-

quaternion The quaternion to extract yaw from

- Returns

- The yaw in degrees

Definition at line 238 of file mobility_path_to_external_object.cpp.

Referenced by calculateAngVelocityOfPredictedStates().

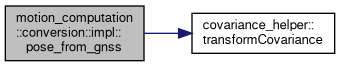

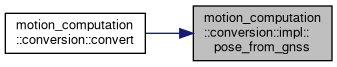

◆ pose_from_gnss()

| geometry_msgs::msg::PoseWithCovariance motion_computation::conversion::impl::pose_from_gnss | ( | const lanelet::projection::LocalFrameProjector & | projector, |

| const tf2::Quaternion & | ned_in_map_rotation, | ||

| const lanelet::GPSPoint & | gps_point, | ||

| const double & | heading, | ||

| const double | lat_variance, | ||

| const double | lon_variance, | ||

| const double | heading_variance | ||

| ) |

Convert the position information into the map frame using the proj library

Convert the position information into the map frame using the proj library

Definition at line 338 of file psm_to_external_object_convertor.cpp.

References covariance_helper::transformCovariance().

Referenced by motion_computation::conversion::convert().

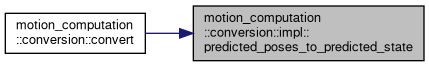

◆ predicted_poses_to_predicted_state()

| std::vector< carma_perception_msgs::msg::PredictedState > motion_computation::conversion::impl::predicted_poses_to_predicted_state | ( | const std::vector< geometry_msgs::msg::Pose > & | poses, |

| double | constant_velocity, | ||

| const rclcpp::Time & | start_time, | ||

| const rclcpp::Duration & | step_size, | ||

| const std::string & | frame, | ||

| double | initial_pose_confidence, | ||

| double | initial_vel_confidence | ||

| ) |

Definition at line 442 of file psm_to_external_object_convertor.cpp.

Referenced by motion_computation::conversion::convert().

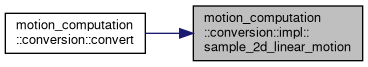

◆ sample_2d_linear_motion()

| std::vector< geometry_msgs::msg::Pose > motion_computation::conversion::impl::sample_2d_linear_motion | ( | const geometry_msgs::msg::Pose & | pose, |

| double | velocity, | ||

| double | period, | ||

| double | step_size | ||

| ) |

Definition at line 294 of file psm_to_external_object_convertor.cpp.

Referenced by motion_computation::conversion::convert().

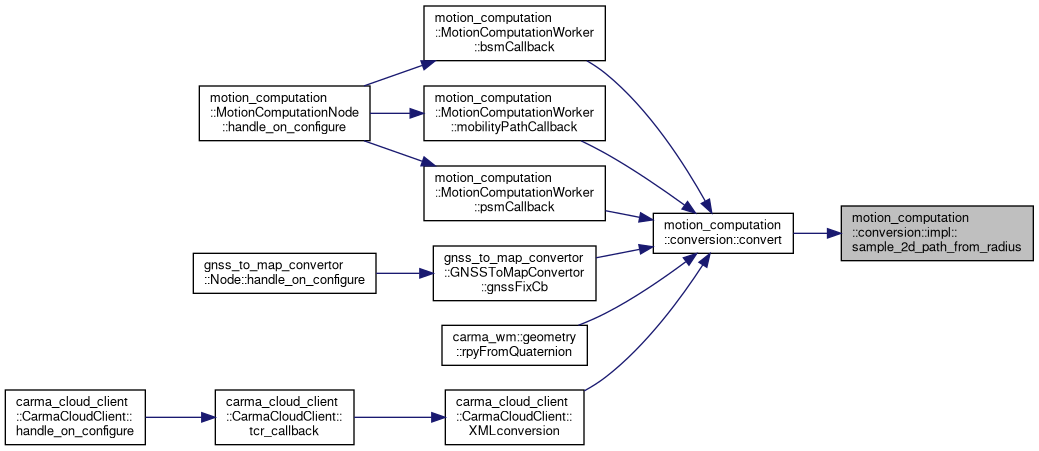

◆ sample_2d_path_from_radius()

| std::vector< geometry_msgs::msg::Pose > motion_computation::conversion::impl::sample_2d_path_from_radius | ( | const geometry_msgs::msg::Pose & | pose, |

| double | velocity, | ||

| double | radius_of_curvature, | ||

| double | period, | ||

| double | step_size | ||

| ) |

Definition at line 237 of file psm_to_external_object_convertor.cpp.

References osm_transform::x, and osm_transform::y.

Referenced by motion_computation::conversion::convert().

◆ transform_to_map_frame()

| tf2::Vector3 motion_computation::conversion::impl::transform_to_map_frame | ( | const tf2::Vector3 & | ecef_point, |

| const lanelet::projection::LocalFrameProjector & | map_projector | ||

| ) |

Transforms ecef point to map frame using internally saved map transform.

- Parameters

-

ecef_point ecef_point to transform map_projector Projector used for frame conversion

- Returns

- point in map

Definition at line 254 of file mobility_path_to_external_object.cpp.

Referenced by motion_computation::conversion::convert().