Namespaces

| namespace | impl |

Functions

| void | convert (const carma_v2x_msgs::msg::PSM &in_msg, carma_perception_msgs::msg::ExternalObject &out_msg, const std::string &map_frame_id, double pred_period, double pred_step_size, const lanelet::projection::LocalFrameProjector &map_projector, const tf2::Quaternion &ned_in_map_rotation, rclcpp::node_interfaces::NodeClockInterface::SharedPtr node_clock) |

| void | convert (const carma_v2x_msgs::msg::BSM &in_msg, carma_perception_msgs::msg::ExternalObject &out_msg, const std::string &map_frame_id, double pred_period, double pred_step_size, const lanelet::projection::LocalFrameProjector &map_projector, tf2::Quaternion ned_in_map_rotation) |

| void | convert (const carma_v2x_msgs::msg::MobilityPath &in_msg, carma_perception_msgs::msg::ExternalObject &out_msg, const lanelet::projection::LocalFrameProjector &map_projector) |

Function Documentation

◆ convert() [1/3]

| void | motion_computation::conversion::convert (const carma_v2x_msgs::msg::BSM &in_msg, carma_perception_msgs::msg::ExternalObject &out_msg, const std::string &map_frame_id, double pred_period, double pred_step_size, const lanelet::projection::LocalFrameProjector &map_projector, tf2::Quaternion ned_in_map_rotation) |

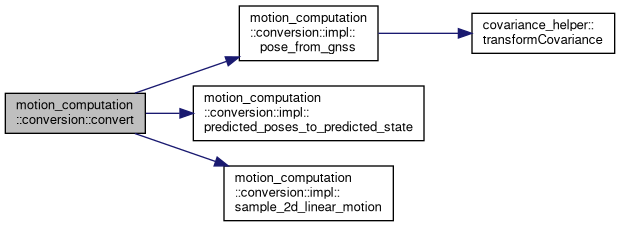

Definition at line 28 of file bsm_to_external_object_convertor.cpp.

References process_bag::i, motion_computation::conversion::impl::pose_from_gnss(), motion_computation::conversion::impl::predicted_poses_to_predicted_state(), and motion_computation::conversion::impl::sample_2d_linear_motion().
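This page does not define `pred_period` and `pred_step_size`. The sketch below assumes they are a prediction horizon and a sampling interval, both in seconds, and shows how a constant-velocity path could be sampled under that assumption. `Point2d` and `sample_linear_motion_sketch` are hypothetical stand-ins written for this illustration; they are not CARMA types, and this is not the implementation of `impl::sample_2d_linear_motion()`.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a 2D position; not a CARMA type.
struct Point2d {
  double x;
  double y;
};

// Hypothetical helper (assumption, not the CARMA implementation):
// samples a constant-speed, constant-heading path. The first sample lies
// one step ahead of the origin and the last lies at or before pred_period.
std::vector<Point2d> sample_linear_motion_sketch(
    const Point2d &origin, double heading_rad, double speed_mps,
    double pred_period, double pred_step_size) {
  std::vector<Point2d> points;
  if (pred_step_size <= 0.0 || pred_period <= 0.0) {
    return points;  // nothing to predict
  }
  // Small tolerance so that, e.g., 2.0 / 0.1 yields 20 steps despite rounding.
  const auto steps = static_cast<std::size_t>(
      std::floor(pred_period / pred_step_size + 1e-9));
  points.reserve(steps);
  for (std::size_t i = 1; i <= steps; ++i) {
    const double t = static_cast<double>(i) * pred_step_size;
    points.push_back({origin.x + speed_mps * t * std::cos(heading_rad),
                      origin.y + speed_mps * t * std::sin(heading_rad)});
  }
  return points;
}
```

Under these assumptions, a horizon of 2.0 s with a 0.5 s step produces four predicted points. Whether the real conversion includes the current pose or the endpoint in its output is not stated on this page.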

◆ convert() [2/3]

| void | motion_computation::conversion::convert (const carma_v2x_msgs::msg::MobilityPath &in_msg, carma_perception_msgs::msg::ExternalObject &out_msg, const lanelet::projection::LocalFrameProjector &map_projector) |

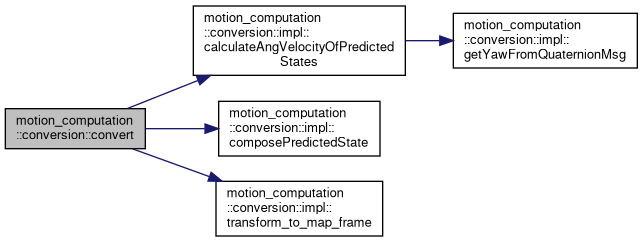

Definition at line 31 of file mobility_path_to_external_object.cpp.

References motion_computation::conversion::impl::calculateAngVelocityOfPredictedStates(), motion_computation::conversion::impl::composePredictedState(), process_bag::i, and motion_computation::conversion::impl::transform_to_map_frame().

◆ convert() [3/3]

| void | motion_computation::conversion::convert (const carma_v2x_msgs::msg::PSM &in_msg, carma_perception_msgs::msg::ExternalObject &out_msg, const std::string &map_frame_id, double pred_period, double pred_step_size, const lanelet::projection::LocalFrameProjector &map_projector, const tf2::Quaternion &ned_in_map_rotation, rclcpp::node_interfaces::NodeClockInterface::SharedPtr node_clock) |

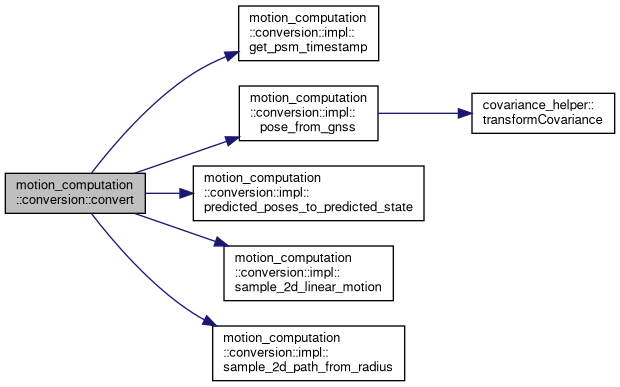

Definition at line 38 of file psm_to_external_object_convertor.cpp.

References motion_computation::conversion::impl::get_psm_timestamp(), process_bag::i, motion_computation::conversion::impl::pose_from_gnss(), motion_computation::conversion::impl::predicted_poses_to_predicted_state(), motion_computation::conversion::impl::sample_2d_linear_motion(), and motion_computation::conversion::impl::sample_2d_path_from_radius().

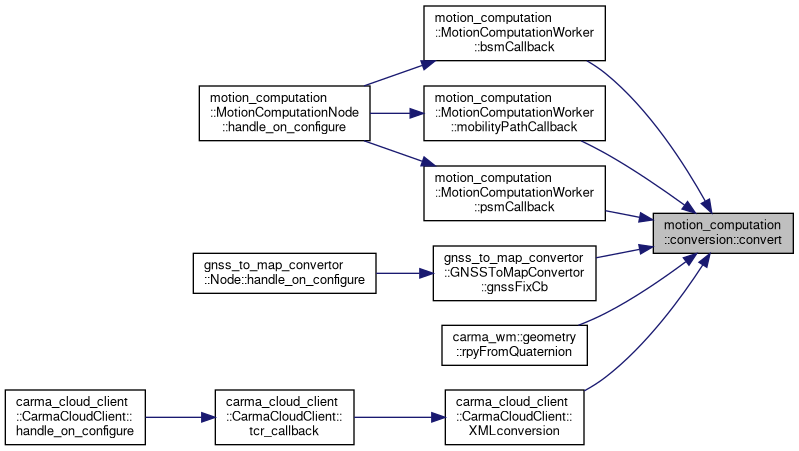

Referenced by motion_computation::MotionComputationWorker::bsmCallback(), gnss_to_map_convertor::GNSSToMapConvertor::gnssFixCb(), motion_computation::MotionComputationWorker::mobilityPathCallback(), motion_computation::MotionComputationWorker::psmCallback(), carma_wm::geometry::rpyFromQuaternion(), and carma_cloud_client::CarmaCloudClient::XMLconversion().